¶ 13.1

Introduction into searching with Manticore Search

Searching is a core feature of Manticore Search. You can:

General syntax

SQL:

SELECT ... [OPTION <optionname>=<value> [ , ... ]]

HTTP:

POST /search

{

"index" : "index_name",

"options":

{

...

}

}

The MATCH clause allows for full-text searches in text fields. The input query string is tokenized using the same settings applied to the text during indexing. In addition to the tokenization of input text, the query string supports a number of full-text operators that enforce various rules on how keywords should provide a valid match.

Full-text match clauses can be combined with attribute filters as an AND boolean. OR relations between full-text matches and attribute filters are not supported.

The match query is always executed first in the filtering process, followed by the attribute filters. The attribute filters are applied to the result set of the match query. A query without a match clause is called a fullscan.

There must be at most one MATCH() in the SELECT clause.

Using the full-text query syntax, matching is performed across all indexed text fields of a document, unless the expression requires a match within a field (like phrase search) or is limited by field operators.

SQL

SELECT * FROM myindex WHERE MATCH('cats|birds');

The SELECT statement uses a MATCH clause, which must come after WHERE, for performing full-text searches. MATCH() accepts an input string in which all full-text operators are available.

SQL

SELECT * FROM myindex WHERE MATCH('"find me fast"/2');

+------+------+----------------+

| id | gid | title |

+------+------+----------------+

| 1 | 11 | first find me |

| 2 | 12 | second find me |

+------+------+----------------+

2 rows in set (0.00 sec)

MATCH with filters

An example of a more complex query using MATCH with WHERE filters.

SELECT * FROM myindex WHERE MATCH('cats|birds') AND (`title`='some title' AND `id`=123);

HTTP JSON

Full-text matching is available in the /search endpoint and in HTTP-based clients. The following clauses can be used for performing full-text matches:

match

"match" is a simple query that matches the specified keywords in the specified fields.

"query":

{

"match": { "field": "keyword" }

}

You can specify a list of fields:

"match":

{

"field1,field2": "keyword"

}

Or you can use _all or * to search all fields.

You can search all fields except one using "!field":

"match":

{

"!field1": "keyword"

}

By default, keywords are combined using the OR operator. However, you can change that behavior using the "operator" clause:

"query":

{

"match":

{

"content,title":

{

"query":"keyword",

"operator":"or"

}

}

}

"operator" can be set to "or" or "and".

match_phrase

"match_phrase" is a query that matches the entire phrase. It is similar to a phrase operator in SQL. Here's an example:

"query":

{

"match_phrase": { "_all" : "had grown quite" }

}

query_string

"query_string" accepts an input string as a full-text query in MATCH() syntax.

"query":

{

"query_string": "Church NOTNEAR/3 street"

}

match_all

"match_all" accepts an empty object and returns documents from the table without performing any attribute filtering or full-text matching. Alternatively, you can just omit the query clause in the request which will have the same effect.

"query":

{

"match_all": {}

}

Combining full-text filtering with other filters

All full-text match clauses can be combined with must, must_not, and should operators of a JSON bool query.

Examples:

match

// POST /search -d

{

"index" : "hn_small",

"query":

{

"match":

{

"*" : "find joe"

}

},

"_source": ["story_author","comment_author"],

"limit": 1

}

{

"took" : 3,

"timed_out" : false,

"hits" : {

"hits" : [

{

"_id" : "668018",

"_score" : 3579,

"_source" : {

"story_author" : "IgorPartola",

"comment_author" : "joe_the_user"

}

}

],

"total" : 88063,

"total_relation" : "eq"

}

}

match_phrase

POST /search

-d

'{

"index" : "hn_small",

"query":

{

"match_phrase":

{

"*" : "find joe"

}

},

"_source": ["story_author","comment_author"],

"limit": 1

}'

{

"took" : 3,

"timed_out" : false,

"hits" : {

"hits" : [

{

"_id" : "807160",

"_score" : 2599,

"_source" : {

"story_author" : "rbanffy",

"comment_author" : "runjake"

}

}

],

"total" : 2,

"total_relation" : "eq"

}

}

query_string

POST /search

-d

'{ "index" : "hn_small",

"query":

{

"query_string": "@comment_text \"find joe fast \"/2"

},

"_source": ["story_author","comment_author"],

"limit": 1

}'

{

"took" : 3,

"timed_out" : false,

"hits" : {

"hits" : [

{

"_id" : "807160",

"_score" : 2566,

"_source" : {

"story_author" : "rbanffy",

"comment_author" : "runjake"

}

}

],

"total" : 1864,

"total_relation" : "eq"

}

}

PHP

$search = new Search(new Client());

$result = $search->('@title find me fast');

foreach($result as $doc)

{

echo 'Document: '.$doc->getId();

foreach($doc->getData() as $field=>$value)

{

echo $field.': '.$value;

}

}

Document: 1

title: first find me fast

gid: 11

Document: 2

title: second find me fast

gid: 12

Python

Python

searchApi.search({"index":"hn_small","query":{"query_string":"@comment_text \"find joe fast \"/2"}, "_source": ["story_author","comment_author"], "limit":1})

{'aggregations': None,

'hits': {'hits': [{'_id': '807160',

'_score': 2566,

'_source': {'comment_author': 'runjake',

'story_author': 'rbanffy'}}],

'max_score': None,

'total': 1864,

'total_relation': 'eq'},

'profile': None,

'timed_out': False,

'took': 2,

'warning': None}

javascript

javascript

res = await searchApi.search({"index":"hn_small","query":{"query_string":"@comment_text \"find joe fast \"/2"}, "_source": ["story_author","comment_author"], "limit":1});

{

took: 1,

timed_out: false,

hits: {

exports: {

total: 1864,

total_relation: 'eq',

hits: [

{

_id: '807160',

_score: 2566,

_source: { story_author: 'rbanffy', comment_author: 'runjake' }

}

]

}

}

}

java

Java

query = new HashMap<String,Object>();

query.put("query_string", "@comment_text \"find joe fast \"/2");

searchRequest = new SearchRequest();

searchRequest.setIndex("hn_small");

searchRequest.setQuery(query);

searchRequest.addSourceItem("story_author");

searchRequest.addSourceItem("comment_author");

searchRequest.limit(1);

searchResponse = searchApi.search(searchRequest);

class SearchResponse {

took: 1

timedOut: false

aggregations: null

hits: class SearchResponseHits {

maxScore: null

total: 1864

totalRelation: eq

hits: [{_id=807160, _score=2566, _source={story_author=rbanffy, comment_author=runjake}}]

}

profile: null

warning: null

}

C#

C#

object query = new { query_string="@comment_text \"find joe fast \"/2" };

var searchRequest = new SearchRequest("hn_small", query);

searchRequest.Source = new List<string> {"story_author", "comment_author"};

searchRequest.Limit = 1;

SearchResponse searchResponse = searchApi.Search(searchRequest);

class SearchResponse {

took: 1

timedOut: false

aggregations: null

hits: class SearchResponseHits {

maxScore: null

total: 1864

totalRelation: eq

hits: [{_id=807160, _score=2566, _source={story_author=rbanffy, comment_author=runjake}}]

}

profile: null

warning: null

}

TypeScript

TypeScript

res = await searchApi.search({

index: 'test',

query: { query_string: "test document 1" },

"_source": ["content", "title"],

limit: 1

});

{

took: 1,

timed_out: false,

hits:

exports {

total: 5,

total_relation: 'eq',

hits:

[ { _id: '1',

_score: 2566,

_source: { content: 'This is a test document 1', title: 'Doc 1' }

}

]

}

}

Go

Go

searchRequest := manticoresearch.NewSearchRequest("test")

query := map[string]interface{} {"query_string": "test document 1"}

searchReq.SetSource([]string{"content", "title"})

searchReq.SetLimit(1)

resp, httpRes, err := search.SearchRequest(*searchRequest).Execute()

{

"hits": {

"hits": [

{

"_id": "1",

"_score": 2566,

"_source": {

"content": "This is a test document 1",

"title": "Doc 1"

}

}

],

"total": 5,

"total_relation": "eq"

},

"timed_out": false,

"took": 0

}

The query string can include specific operators that define the conditions for how the words from the query string should be matched.

Boolean operators

AND operator

An implicit logical AND operator is always present, so "hello world" implies that both "hello" and "world" must be found in the matching document.

Note: There is no explicit AND operator.

OR operator

The logical OR operator | has a higher precedence than AND, so looking for cat | dog | mouse means looking for (cat | dog | mouse) rather than (looking for cat) | dog | mouse.

Note: There is no operator OR. Please use | instead.

MAYBE operator

The MAYBE operator functions similarly to the | operator, but it does not return documents that match only the right subtree expression.

Negation operator

hello -world

hello !world

The negation operator enforces a rule for a word to not exist.

Queries containing only negations are not supported by default. To enable, use the server option not_terms_only_allowed.

Field search operator

The field limit operator restricts subsequent searches to a specified field. By default, the query will fail with an error message if the given field name does not exist in the searched table. However, this behavior can be suppressed by specifying the @@relaxed option at the beginning of the query:

@@relaxed @nosuchfield my query

This can be useful when searching through heterogeneous tables with different schemas.

Field position limits additionally constrain the search to the first N positions within a given field (or fields). For example, @body [50] hello will not match documents where the keyword hello appears at position 51 or later in the body.

Multiple-field search operator:

@(title,body) hello world

Ignore field search operator (ignores any matches of 'hello world' from the 'title' field):

Ignore multiple-field search operator (if there are fields 'title', 'subject', and 'body', then @!(title) is equivalent to @(subject,body)):

@!(title,body) hello world

All-field search operator:

Phrase search operator

The phrase operator mandates that the words be adjacent to each other.

The phrase search operator can incorporate a match any term modifier. Within the phrase operator, terms are positionally significant. When the 'match any term' modifier is employed, the positions of the subsequent terms in that phrase query will be shifted. As a result, the 'match any' modifier does not affect search performance.

"exact * phrase * * for terms"

Proximity search operator

Proximity distance is measured in words, accounting for word count, and applies to all words within quotes. For example, the query "cat dog mouse"~5 indicates that there must be a span of fewer than 8 words containing all 3 words. Therefore, a document with CAT aaa bbb ccc DOG eee fff MOUSE will not match this query, as the span is exactly 8 words long.

Quorum matching operator

"the world is a wonderful place"/3

The quorum matching operator introduces a type of fuzzy matching. It will match only those documents that meet a given threshold of specified words. In the example above ("the world is a wonderful place"/3), it will match all documents containing at least 3 of the 6 specified words. The operator is limited to 255 keywords. Instead of an absolute number, you can also provide a value between 0.0 and 1.0 (representing 0% and 100%), and Manticore will match only documents containing at least the specified percentage of given words. The same example above could also be expressed as "the world is a wonderful place"/0.5, and it would match documents with at least 50% of the 6 words.

Strict order operator

The strict order operator (also known as the "before" operator) matches a document only if its argument keywords appear in the document precisely in the order specified in the query. For example, the query black << cat will match the document "black and white cat" but not the document "that cat was black". The order operator has the lowest priority. It can be applied to both individual keywords and more complex expressions. For instance, this is a valid query:

(bag of words) << "exact phrase" << red|green|blue

raining =cats and =dogs

="exact phrase"

The exact form keyword modifier matches a document only if the keyword appears in the exact form specified. By default, a document is considered a match if the stemmed/lemmatized keyword matches. For instance, the query "runs" will match both a document containing "runs" and one containing "running", because both forms stem to just "run". However, the =runs query will only match the first document. The exact form operator requires the index_exact_words option to be enabled.

Another use case is to prevent expanding a keyword to its *keyword* form. For example, with index_exact_words=1 + expand_keywords=1/star, bcd will find a document containing abcde, but =bcd will not.

As a modifier affecting the keyword, it can be used within operators such as phrase, proximity, and quorum operators. Applying an exact form modifier to the phrase operator is possible, and in this case, it internally adds the exact form modifier to all terms in the phrase.

Wildcard operators

nation* *nation* *national

Requires min_infix_len for prefix (expansion in trail) and/or suffix (expansion in head). If only prefixing is desired, min_prefix_len can be used instead.

The search will attempt to find all expansions of the wildcarded tokens, and each expansion is recorded as a matched hit. The number of expansions for a token can be controlled with the expansion_limit table setting. Wildcarded tokens can have a significant impact on query search time, especially when tokens have short lengths. In such cases, it is desirable to use the expansion limit.

The wildcard operator can be automatically applied if the expand_keywords table setting is used.

In addition, the following inline wildcard operators are supported:

? can match any single character: t?st will match test, but not teast% can match zero or one character: tes% will match tes or test, but not testing

The inline operators require dict=keywords and infixing enabled.

REGEX operator

Requires the min_infix_len or min_prefix_len and dict=keywords options to be set (which is a default).

Similarly to the wildcard operators, the REGEX operator attempts to find all tokens matching the provided pattern, and each expansion is recorded as a matched hit. Note, this can have a significant impact on query search time, as the entire dictionary is scanned, and every term in the dictionary undergoes matching with the REGEX pattern.

The patterns should adhere to the RE2 syntax. The REGEX expression delimiter is the first symbol after the open bracket. In other words, all text between the open bracket followed by the delimiter and the delimiter and the closed bracket is considered as a RE2 expression.

Please note that the terms stored in the dictionary undergo charset_table transformation, meaning that for example, REGEX may not be able to match uppercase characters if all characters are lowercased according to the charset_table (which happens by default). To successfully match a term using a REGEX expression, the pattern must correspond to the entire token. To achieve partial matching, place .* at the beginning and/or end of your pattern.

REGEX(/.{3}t/)

REGEX(/t.*\d*/)

Field-start and field-end modifier

Field-start and field-end keyword modifiers ensure that a keyword only matches if it appears at the very beginning or the very end of a full-text field, respectively. For example, the query "^hello world$" (enclosed in quotes to combine the phrase operator with the start/end modifiers) will exclusively match documents containing at least one field with these two specific keywords.

IDF boost modifier

boosted^1.234 boostedfieldend$^1.234

The boost modifier raises the word IDF score by the indicated factor in ranking scores that incorporate IDF into their calculations. It does not impact the matching process in any manner.

NEAR operator

hello NEAR/3 world NEAR/4 "my test"

The NEAR operator is a more generalized version of the proximity operator. Its syntax is NEAR/N, which is case-sensitive and does not allow spaces between the NEAR keywords, slash sign, and distance value.

While the original proximity operator works only on sets of keywords, NEAR is more versatile and can accept arbitrary subexpressions as its two arguments. It matches a document when both subexpressions are found within N words of each other, regardless of their order. NEAR is left-associative and shares the same (lowest) precedence as BEFORE.

It is important to note that one NEAR/7 two NEAR/7 three is not exactly equivalent to "one two three"~7. The key difference is that the proximity operator allows up to 6 non-matching words between all three matching words, while the version with NEAR is less restrictive: it permits up to 6 words between one and two, and then up to 6 more between that two-word match and three.

NOTNEAR operator

The NOTNEAR operator serves as a negative assertion. It matches a document when the left argument is present and either the right argument is absent from the document or the right argument is a specified distance away from the end of the left matched argument. The distance is denoted in words. The syntax is NOTNEAR/N, which is case-sensitive and does not permit spaces between the NOTNEAR keyword, slash sign, and distance value. Both arguments of this operator can be terms or any operators or group of operators.

SENTENCE and PARAGRAPH operators

all SENTENCE words SENTENCE "in one sentence"

"Bill Gates" PARAGRAPH "Steve Jobs"

The SENTENCE and PARAGRAPH operators match a document when both of their arguments are within the same sentence or the same paragraph of text, respectively. These arguments can be keywords, phrases, or instances of the same operator.

The order of the arguments within the sentence or paragraph is irrelevant. These operators function only with tables built with index_sp (sentence and paragraph indexing feature) enabled and revert to a simple AND operation otherwise. For information on what constitutes a sentence and a paragraph, refer to the index_sp directive documentation.

ZONE limit operator

ZONE:(h3,h4)

only in these titles

The ZONE limit operator closely resembles the field limit operator but limits matching to a specified in-field zone or a list of zones. It is important to note that subsequent subexpressions do not need to match within a single continuous span of a given zone and may match across multiple spans. For example, the query (ZONE:th hello world) will match the following sample document:

<th>Table 1. Local awareness of Hello Kitty brand.</th>

.. some table data goes here ..

<th>Table 2. World-wide brand awareness.</th>

The ZONE operator influences the query until the next field or ZONE limit operator, or until the closing parenthesis. It functions exclusively with tables built with zone support (refer to index_zones) and will be disregarded otherwise.

ZONESPAN limit operator

ZONESPAN:(h2)

only in a (single) title

The ZONESPAN limit operator resembles the ZONE operator but mandates that the match occurs within a single continuous span. In the example provided earlier, ZONESPAN:th hello world would not match the document, as "hello" and "world" do not appear within the same span.

¶ 13.2.3

Escaping characters in query string

Since certain characters function as operators in the query string, they must be escaped to prevent query errors or unintended matching conditions.

The following characters should be escaped using a backslash (\):

! " $ ' ( ) - / < @ \ ^ | ~

In MySQL command line client

To escape a single quote ('), use one backslash:

SELECT * FROM your_index WHERE MATCH('l\'italiano');

For the other characters in the list mentioned earlier, which are operators or query constructs, they must be treated as simple characters by the engine, with a preceding escape character.

The backslash must also be escaped, resulting in two backslashes:

SELECT * FROM your_index WHERE MATCH('r\\&b | \\(official video\\)');

To use a backslash as a character, you must escape both the backslash as a character and the backslash as the escape operator, which requires four backslashes:

SELECT * FROM your_index WHERE MATCH('\\\\ABC');

When you are working with JSON data in Manticore Search and need to include a double quote (") within a JSON string, it's important to handle it with proper escaping. In JSON, a double quote within a string is escaped using a backslash (\). However, when inserting the JSON data through an SQL query, Manticore Search interprets the backslash (\) as an escape character within strings.

To ensure the double quote is correctly inserted into the JSON data, you need to escape the backslash itself. This results in using two backslashes (\\) before the double quote. For example:

insert into tbl(j) values('{"a": "\\"abc\\""}');

Using MySQL drivers

MySQL drivers provide escaping functions (e.g., mysqli_real_escape_string in PHP or conn.escape_string in Python), but they only escape specific characters.

You will still need to add escaping for the characters from the previously mentioned list that are not escaped by their respective functions.

Because these functions will escape the backslash for you, you only need to add one backslash.

This also applies to drivers that support (client-side) prepared statements. For example, with PHP PDO prepared statements, you need to add a backslash for the $ character:

$statement = $ln_sph->prepare( "SELECT * FROM index WHERE MATCH(:match)");

$match = '\$manticore';

$statement->bindParam(':match',$match,PDO::PARAM_STR);

$results = $statement->execute();

This results in the final query SELECT * FROM index WHERE MATCH('\\$manticore');

In HTTP JSON API

The same rules for the SQL protocol apply, with the exception that for JSON, the double quote must be escaped with a single backslash, while the rest of the characters require double escaping.

When using JSON libraries or functions that convert data structures to JSON strings, the double quote and single backslash are automatically escaped by these functions and do not need to be explicitly escaped.

In clients

The new official clients (which use the HTTP protocol) utilize common JSON libraries/functions available in their respective programming languages under the hood. The same rules for escaping mentioned earlier apply.

Escaping asterisk

The asterisk (*) is a unique character that serves two purposes:

- as a wildcard prefix/suffix expander

- as an any-term modifier within a phrase search.

Unlike other special characters that function as operators, the asterisk cannot be escaped when it's in a position to provide one of its functionalities.

In non-wildcard queries, the asterisk does not require escaping, whether it's in the charset_table or not.

In wildcard queries, an asterisk in the middle of a word does not require escaping. As a wildcard operator (either at the beginning or end of the word), the asterisk will always be interpreted as the wildcard operator, even if escaping is applied.

Escaping json node names in SQL

To escape special characters in JSON nodes, use a backtick. For example:

MySQL [(none)]> select * from t where json.`a=b`=234;

+---------------------+-------------+------+

| id | json | text |

+---------------------+-------------+------+

| 8215557549554925578 | {"a=b":234} | |

+---------------------+-------------+------+

MySQL [(none)]> select * from t where json.`a:b`=123;

+---------------------+-------------+------+

| id | json | text |

+---------------------+-------------+------+

| 8215557549554925577 | {"a:b":123} | |

+---------------------+-------------+------+

How a query is interpreted

Consider this complex query example:

"hello world" @title "example program"~5 @body python -(php|perl) @* code

The full meaning of this search is:

- Locate the words 'hello' and 'world' adjacently in any field within a document;

- Additionally, the same document must also contain the words 'example' and 'program' in the title field, with up to, but not including, 5 words between them; (For instance, "example PHP program" would match, but "example script to introduce outside data into the correct context for your program" would not, as there are 5 or more words between the two terms)

- Furthermore, the same document must have the word 'python' in the body field, while excluding 'php' or 'perl';

- Finally, the same document must include the word 'code' in any field.

The OR operator takes precedence over AND, so "looking for cat | dog | mouse" means "looking for (cat | dog | mouse)" rather than "(looking for cat) | dog | mouse".

To comprehend how a query will be executed, Manticore Search provides query profiling tools to examine the query tree generated by a query expression.

Profiling the query tree in SQL

To enable full-text query profiling with an SQL statement, you must activate it before executing the desired query:

SET profiling =1;

SELECT * FROM test WHERE MATCH('@title abc* @body hey');

To view the query tree, execute the SHOW PLAN command immediately after running the query:

This command will return the structure of the executed query. Keep in mind that the 3 statements - SET profiling, the query, and SHOW - must be executed within the same session.

Profiling the query in HTTP JSON

When using the HTTP JSON protocol we can just enable "profile":true to get in response the full-text query tree structure.

{

"index":"test",

"profile":true,

"query":

{

"match_phrase": { "_all" : "had grown quite" }

}

}

The response will include a profile object containing a query member.

The query property holds the transformed full-text query tree. Each node consists of:

type: node type, which can be AND, OR, PHRASE, KEYWORD, etc.description: query subtree for this node represented as a string (in SHOW PLAN format)children: any child nodes, if presentmax_field_pos: maximum position within a field

A keyword node will additionally include:

word: the transformed keyword.querypos: position of this keyword in the query.excluded: keyword excluded from the query.expanded: keyword added by prefix expansion.field_start: keyword must appear at the beginning of the field.field_end: keyword must appear at the end of the field.boost: the keyword's IDF will be multiplied by this value.

SQL

SET profiling=1;

SELECT * FROM test WHERE MATCH('@title abc* @body hey');

SHOW PLAN \G

*************************** 1\. row ***************************

Variable: transformed_tree

Value: AND(

OR(fields=(title), KEYWORD(abcx, querypos=1, expanded), KEYWORD(abcm, querypos=1, expanded)),

AND(fields=(body), KEYWORD(hey, querypos=2)))

1 row in set (0.00 sec)

JSON

POST /search

{

"index": "forum",

"query": {"query_string": "i me"},

"_source": { "excludes":["*"] },

"limit": 1,

"profile":true

}

{

"took":1503,

"timed_out":false,

"hits":

{

"total":406301,

"hits":

[

{

"_id":"406443",

"_score":3493,

"_source":{}

}

]

},

"profile":

{

"query":

{

"type":"AND",

"description":"AND( AND(KEYWORD(i, querypos=1)), AND(KEYWORD(me, querypos=2)))",

"children":

[

{

"type":"AND",

"description":"AND(KEYWORD(i, querypos=1))",

"children":

[

{

"type":"KEYWORD",

"word":"i",

"querypos":1

}

]

},

{

"type":"AND",

"description":"AND(KEYWORD(me, querypos=2))",

"children":

[

{

"type":"KEYWORD",

"word":"me",

"querypos":2

}

]

}

]

}

}

}

PHP

$result = $index->search('i me')->setSource(['excludes'=>['*']])->setLimit(1)->profile()->get();

print_r($result->getProfile());

Array

(

[query] => Array

(

[type] => AND

[description] => AND( AND(KEYWORD(i, querypos=1)), AND(KEYWORD(me, querypos=2)))

[children] => Array

(

[0] => Array

(

[type] => AND

[description] => AND(KEYWORD(i, querypos=1))

[children] => Array

(

[0] => Array

(

[type] => KEYWORD

[word] => i

[querypos] => 1

)

)

)

[1] => Array

(

[type] => AND

[description] => AND(KEYWORD(me, querypos=2))

[children] => Array

(

[0] => Array

(

[type] => KEYWORD

[word] => me

[querypos] => 2

)

)

)

)

)

)

Python

Python

searchApi.search({"index":"forum","query":{"query_string":"i me"},"_source":{"excludes":["*"]},"limit":1,"profile":True})

{'hits': {'hits': [{u'_id': u'100', u'_score': 2500, u'_source': {}}],

'total': 1},

'profile': {u'query': {u'children': [{u'children': [{u'querypos': 1,

u'type': u'KEYWORD',

u'word': u'i'}],

u'description': u'AND(KEYWORD(i, querypos=1))',

u'type': u'AND'},

{u'children': [{u'querypos': 2,

u'type': u'KEYWORD',

u'word': u'me'}],

u'description': u'AND(KEYWORD(me, querypos=2))',

u'type': u'AND'}],

u'description': u'AND( AND(KEYWORD(i, querypos=1)), AND(KEYWORD(me, querypos=2)))',

u'type': u'AND'}},

'timed_out': False,

'took': 0}

javascript

javascript

res = await searchApi.search({"index":"forum","query":{"query_string":"i me"},"_source":{"excludes":["*"]},"limit":1,"profile":true});

{"hits": {"hits": [{"_id": "100", "_score": 2500, "_source": {}}],

"total": 1},

"profile": {"query": {"children": [{"children": [{"querypos": 1,

"type": "KEYWORD",

"word": "i"}],

"description": "AND(KEYWORD(i, querypos=1))",

"type": "AND"},

{"children": [{"querypos": 2,

"type": "KEYWORD",

"word": "me"}],

"description": "AND(KEYWORD(me, querypos=2))",

"type": "AND"}],

"description": "AND( AND(KEYWORD(i, querypos=1)), AND(KEYWORD(me, querypos=2)))",

"type": "AND"}},

"timed_out": False,

"took": 0}

java

Java

query = new HashMap<String,Object>();

query.put("query_string","i me");

searchRequest = new SearchRequest();

searchRequest.setIndex("forum");

searchRequest.setQuery(query);

searchRequest.setProfile(true);

searchRequest.setLimit(1);

searchRequest.setSort(new ArrayList<String>(){{

add("*");

}});

searchResponse = searchApi.search(searchRequest);

class SearchResponse {

took: 18

timedOut: false

hits: class SearchResponseHits {

total: 1

hits: [{_id=100, _score=2500, _source={}}]

aggregations: null

}

profile: {query={type=AND, description=AND( AND(KEYWORD(i, querypos=1)), AND(KEYWORD(me, querypos=2))), children=[{type=AND, description=AND(KEYWORD(i, querypos=1)), children=[{type=KEYWORD, word=i, querypos=1}]}, {type=AND, description=AND(KEYWORD(me, querypos=2)), children=[{type=KEYWORD, word=me, querypos=2}]}]}}

}

C#

C#

object query = new { query_string="i me" };

var searchRequest = new SearchRequest("forum", query);

searchRequest.Profile = true;

searchRequest.Limit = 1;

searchRequest.Sort = new List<Object> { "*" };

var searchResponse = searchApi.Search(searchRequest);

class SearchResponse {

took: 18

timedOut: false

hits: class SearchResponseHits {

total: 1

hits: [{_id=100, _score=2500, _source={}}]

aggregations: null

}

profile: {query={type=AND, description=AND( AND(KEYWORD(i, querypos=1)), AND(KEYWORD(me, querypos=2))), children=[{type=AND, description=AND(KEYWORD(i, querypos=1)), children=[{type=KEYWORD, word=i, querypos=1}]}, {type=AND, description=AND(KEYWORD(me, querypos=2)), children=[{type=KEYWORD, word=me, querypos=2}]}]}}

}

TypeScript

TypeScript

res = await searchApi.search({

index: 'test',

query: { query_string: 'Text' },

_source: { excludes: ['*'] },

limit: 1,

profile: true

});

{

"hits":

{

"hits":

[{

"_id": "1",

"_score": 1480,

"_source": {}

}],

"total": 1

},

"profile":

{

"query": {

"children":

[{

"children":

[{

"querypos": 1,

"type": "KEYWORD",

"word": "i"

}],

"description": "AND(KEYWORD(i, querypos=1))",

"type": "AND"

},

{

"children":

[{

"querypos": 2,

"type": "KEYWORD",

"word": "me"

}],

"description": "AND(KEYWORD(me, querypos=2))",

"type": "AND"

}],

"description": "AND( AND(KEYWORD(i, querypos=1)), AND(KEYWORD(me, querypos=2)))",

"type": "AND"

}

},

"timed_out": False,

"took": 0

}

Go

Go

searchRequest := manticoresearch.NewSearchRequest("test")

query := map[string]interface{} {"query_string": "Text"}

source := map[string]interface{} { "excludes": []string {"*"} }

searchRequest.SetQuery(query)

searchRequest.SetSource(source)

searchReq.SetLimit(1)

searchReq.SetProfile(true)

res, _, _ := apiClient.SearchAPI.Search(context.Background()).SearchRequest(*searchRequest).Execute()

{

"hits":

{

"hits":

[{

"_id": "1",

"_score": 1480,

"_source": {}

}],

"total": 1

},

"profile":

{

"query": {

"children":

[{

"children":

[{

"querypos": 1,

"type": "KEYWORD",

"word": "i"

}],

"description": "AND(KEYWORD(i, querypos=1))",

"type": "AND"

},

{

"children":

[{

"querypos": 2,

"type": "KEYWORD",

"word": "me"

}],

"description": "AND(KEYWORD(me, querypos=2))",

"type": "AND"

}],

"description": "AND( AND(KEYWORD(i, querypos=1)), AND(KEYWORD(me, querypos=2)))",

"type": "AND"

}

},

"timed_out": False,

"took": 0

}

In some instances, the evaluated query tree may significantly differ from the original one due to expansions and other transformations.

SQL

SET profiling=1;

SELECT id FROM forum WHERE MATCH('@title way* @content hey') LIMIT 1;

SHOW PLAN;

Query OK, 0 rows affected (0.00 sec)

+--------+

| id |

+--------+

| 711651 |

+--------+

1 row in set (0.04 sec)

+------------------+-------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------+

| Variable | Value |

+------------------+-------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------+

| transformed_tree | AND(

OR(

OR(

AND(fields=(title), KEYWORD(wayne, querypos=1, expanded)),

OR(

AND(fields=(title), KEYWORD(ways, querypos=1, expanded)),

AND(fields=(title), KEYWORD(wayyy, querypos=1, expanded)))),

AND(fields=(title), KEYWORD(way, querypos=1, expanded)),

OR(fields=(title), KEYWORD(way*, querypos=1, expanded))),

AND(fields=(content), KEYWORD(hey, querypos=2))) |

+------------------+-------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------+

1 row in set (0.00 sec)

JSON

POST /search

{

"index": "forum",

"query": {"query_string": "@title way* @content hey"},

"_source": { "excludes":["*"] },

"limit": 1,

"profile":true

}

{

"took":33,

"timed_out":false,

"hits":

{

"total":105,

"hits":

[

{

"_id":"711651",

"_score":2539,

"_source":{}

}

]

},

"profile":

{

"query":

{

"type":"AND",

"description":"AND( OR( OR( AND(fields=(title), KEYWORD(wayne, querypos=1, expanded)), OR( AND(fields=(title), KEYWORD(ways, querypos=1, expanded)), AND(fields=(title), KEYWORD(wayyy, querypos=1, expanded)))), AND(fields=(title), KEYWORD(way, querypos=1, expanded)), OR(fields=(title), KEYWORD(way*, querypos=1, expanded))), AND(fields=(content), KEYWORD(hey, querypos=2)))",

"children":

[

{

"type":"OR",

"description":"OR( OR( AND(fields=(title), KEYWORD(wayne, querypos=1, expanded)), OR( AND(fields=(title), KEYWORD(ways, querypos=1, expanded)), AND(fields=(title), KEYWORD(wayyy, querypos=1, expanded)))), AND(fields=(title), KEYWORD(way, querypos=1, expanded)), OR(fields=(title), KEYWORD(way*, querypos=1, expanded)))",

"children":

[

{

"type":"OR",

"description":"OR( AND(fields=(title), KEYWORD(wayne, querypos=1, expanded)), OR( AND(fields=(title), KEYWORD(ways, querypos=1, expanded)), AND(fields=(title), KEYWORD(wayyy, querypos=1, expanded))))",

"children":

[

{

"type":"AND",

"description":"AND(fields=(title), KEYWORD(wayne, querypos=1, expanded))",

"fields":["title"],

"max_field_pos":0,

"children":

[

{

"type":"KEYWORD",

"word":"wayne",

"querypos":1,

"expanded":true

}

]

},

{

"type":"OR",

"description":"OR( AND(fields=(title), KEYWORD(ways, querypos=1, expanded)), AND(fields=(title), KEYWORD(wayyy, querypos=1, expanded)))",

"children":

[

{

"type":"AND",

"description":"AND(fields=(title), KEYWORD(ways, querypos=1, expanded))",

"fields":["title"],

"max_field_pos":0,

"children":

[

{

"type":"KEYWORD",

"word":"ways",

"querypos":1,

"expanded":true

}

]

},

{

"type":"AND",

"description":"AND(fields=(title), KEYWORD(wayyy, querypos=1, expanded))",

"fields":["title"],

"max_field_pos":0,

"children":

[

{

"type":"KEYWORD",

"word":"wayyy",

"querypos":1,

"expanded":true

}

]

}

]

}

]

},

{

"type":"AND",

"description":"AND(fields=(title), KEYWORD(way, querypos=1, expanded))",

"fields":["title"],

"max_field_pos":0,

"children":

[

{

"type":"KEYWORD",

"word":"way",

"querypos":1,

"expanded":true

}

]

},

{

"type":"OR",

"description":"OR(fields=(title), KEYWORD(way*, querypos=1, expanded))",

"fields":["title"],

"max_field_pos":0,

"children":

[

{

"type":"KEYWORD",

"word":"way*",

"querypos":1,

"expanded":true

}

]

}

]

},

{

"type":"AND",

"description":"AND(fields=(content), KEYWORD(hey, querypos=2))",

"fields":["content"],

"max_field_pos":0,

"children":

[

{

"type":"KEYWORD",

"word":"hey",

"querypos":2

}

]

}

]

}

}

}

PHP

$result = $index->search('@title way* @content hey')->setSource(['excludes'=>['*']])->setLimit(1)->profile()->get();

print_r($result->getProfile());

Array

(

[query] => Array

(

[type] => AND

[description] => AND( OR( OR( AND(fields=(title), KEYWORD(wayne, querypos=1, expanded)), OR( AND(fields=(title), KEYWORD(ways, querypos=1, expanded)), AND(fields=(title), KEYWORD(wayyy, querypos=1, expanded)))), AND(fields=(title), KEYWORD(way, querypos=1, expanded)), OR(fields=(title), KEYWORD(way*, querypos=1, expanded))), AND(fields=(content), KEYWORD(hey, querypos=2)))

[children] => Array

(

[0] => Array

(

[type] => OR

[description] => OR( OR( AND(fields=(title), KEYWORD(wayne, querypos=1, expanded)), OR( AND(fields=(title), KEYWORD(ways, querypos=1, expanded)), AND(fields=(title), KEYWORD(wayyy, querypos=1, expanded)))), AND(fields=(title), KEYWORD(way, querypos=1, expanded)), OR(fields=(title), KEYWORD(way*, querypos=1, expanded)))

[children] => Array

(

[0] => Array

(

[type] => OR

[description] => OR( AND(fields=(title), KEYWORD(wayne, querypos=1, expanded)), OR( AND(fields=(title), KEYWORD(ways, querypos=1, expanded)), AND(fields=(title), KEYWORD(wayyy, querypos=1, expanded))))

[children] => Array

(

[0] => Array

(

[type] => AND

[description] => AND(fields=(title), KEYWORD(wayne, querypos=1, expanded))

[fields] => Array

(

[0] => title

)

[max_field_pos] => 0

[children] => Array

(

[0] => Array

(

[type] => KEYWORD

[word] => wayne

[querypos] => 1

[expanded] => 1

)

)

)

[1] => Array

(

[type] => OR

[description] => OR( AND(fields=(title), KEYWORD(ways, querypos=1, expanded)), AND(fields=(title), KEYWORD(wayyy, querypos=1, expanded)))

[children] => Array

(

[0] => Array

(

[type] => AND

[description] => AND(fields=(title), KEYWORD(ways, querypos=1, expanded))

[fields] => Array

(

[0] => title

)

[max_field_pos] => 0

[children] => Array

(

[0] => Array

(

[type] => KEYWORD

[word] => ways

[querypos] => 1

[expanded] => 1

)

)

)

[1] => Array

(

[type] => AND

[description] => AND(fields=(title), KEYWORD(wayyy, querypos=1, expanded))

[fields] => Array

(

[0] => title

)

[max_field_pos] => 0

[children] => Array

(

[0] => Array

(

[type] => KEYWORD

[word] => wayyy

[querypos] => 1

[expanded] => 1

)

)

)

)

)

)

)

[1] => Array

(

[type] => AND

[description] => AND(fields=(title), KEYWORD(way, querypos=1, expanded))

[fields] => Array

(

[0] => title

)

[max_field_pos] => 0

[children] => Array

(

[0] => Array

(

[type] => KEYWORD

[word] => way

[querypos] => 1

[expanded] => 1

)

)

)

[2] => Array

(

[type] => OR

[description] => OR(fields=(title), KEYWORD(way*, querypos=1, expanded))

[fields] => Array

(

[0] => title

)

[max_field_pos] => 0

[children] => Array

(

[0] => Array

(

[type] => KEYWORD

[word] => way*

[querypos] => 1

[expanded] => 1

)

)

)

)

)

[1] => Array

(

[type] => AND

[description] => AND(fields=(content), KEYWORD(hey, querypos=2))

[fields] => Array

(

[0] => content

)

[max_field_pos] => 0

[children] => Array

(

[0] => Array

(

[type] => KEYWORD

[word] => hey

[querypos] => 2

)

)

)

)

)

)

Python

Python

searchApi.search({"index":"forum","query":{"query_string":"@title way* @content hey"},"_source":{"excludes":["*"]},"limit":1,"profile":true})

{'hits': {'hits': [{u'_id': u'2811025403043381551',

u'_score': 2643,

u'_source': {}}],

'total': 1},

'profile': {u'query': {u'children': [{u'children': [{u'expanded': True,

u'querypos': 1,

u'type': u'KEYWORD',

u'word': u'way*'}],

u'description': u'AND(fields=(title), KEYWORD(way*, querypos=1, expanded))',

u'fields': [u'title'],

u'type': u'AND'},

{u'children': [{u'querypos': 2,

u'type': u'KEYWORD',

u'word': u'hey'}],

u'description': u'AND(fields=(content), KEYWORD(hey, querypos=2))',

u'fields': [u'content'],

u'type': u'AND'}],

u'description': u'AND( AND(fields=(title), KEYWORD(way*, querypos=1, expanded)), AND(fields=(content), KEYWORD(hey, querypos=2)))',

u'type': u'AND'}},

'timed_out': False,

'took': 0}

javascript

javascript

res = await searchApi.search({"index":"forum","query":{"query_string":"@title way* @content hey"},"_source":{"excludes":["*"]},"limit":1,"profile":true});

{"hits": {"hits": [{"_id": "2811025403043381551",

"_score": 2643,

"_source": {}}],

"total": 1},

"profile": {"query": {"children": [{"children": [{"expanded": True,

"querypos": 1,

"type": "KEYWORD",

"word": "way*"}],

"description": "AND(fields=(title), KEYWORD(way*, querypos=1, expanded))",

"fields": ["title"],

"type": "AND"},

{"children": [{"querypos": 2,

"type": "KEYWORD",

"word": "hey"}],

"description": "AND(fields=(content), KEYWORD(hey, querypos=2))",

"fields": ["content"],

"type": "AND"}],

"description": "AND( AND(fields=(title), KEYWORD(way*, querypos=1, expanded)), AND(fields=(content), KEYWORD(hey, querypos=2)))",

"type": "AND"}},

"timed_out": False,

"took": 0}

java

Java

query = new HashMap<String,Object>();

query.put("query_string","@title way* @content hey");

searchRequest = new SearchRequest();

searchRequest.setIndex("forum");

searchRequest.setQuery(query);

searchRequest.setProfile(true);

searchRequest.setLimit(1);

searchRequest.setSort(new ArrayList<String>(){{

add("*");

}});

searchResponse = searchApi.search(searchRequest);

class SearchResponse {

took: 18

timedOut: false

hits: class SearchResponseHits {

total: 1

hits: [{_id=2811025403043381551, _score=2643, _source={}}]

aggregations: null

}

profile: {query={type=AND, description=AND( AND(fields=(title), KEYWORD(way*, querypos=1, expanded)), AND(fields=(content), KEYWORD(hey, querypos=2))), children=[{type=AND, description=AND(fields=(title), KEYWORD(way*, querypos=1, expanded)), fields=[title], children=[{type=KEYWORD, word=way*, querypos=1, expanded=true}]}, {type=AND, description=AND(fields=(content), KEYWORD(hey, querypos=2)), fields=[content], children=[{type=KEYWORD, word=hey, querypos=2}]}]}}

}

C#

C#

object query = new { query_string="@title way* @content hey" };

var searchRequest = new SearchRequest("forum", query);

searchRequest.Profile = true;

searchRequest.Limit = 1;

searchRequest.Sort = new List<Object> { "*" };

var searchResponse = searchApi.Search(searchRequest);

class SearchResponse {

took: 18

timedOut: false

hits: class SearchResponseHits {

total: 1

hits: [{_id=2811025403043381551, _score=2643, _source={}}]

aggregations: null

}

profile: {query={type=AND, description=AND( AND(fields=(title), KEYWORD(way*, querypos=1, expanded)), AND(fields=(content), KEYWORD(hey, querypos=2))), children=[{type=AND, description=AND(fields=(title), KEYWORD(way*, querypos=1, expanded)), fields=[title], children=[{type=KEYWORD, word=way*, querypos=1, expanded=true}]}, {type=AND, description=AND(fields=(content), KEYWORD(hey, querypos=2)), fields=[content], children=[{type=KEYWORD, word=hey, querypos=2}]}]}}

}

TypeScript

TypeScript

res = await searchApi.search({

index: 'test',

query: { query_string: '@content 1'},

_source: { excludes: ["*"] },

limit:1,

profile":true

});

{

"hits":

{

"hits":

[{

"_id": "1",

"_score": 1480,

"_source": {}

}],

"total": 1

},

"profile":

{

"query":

{

"children":

[{

"children":

[{

"expanded": True,

"querypos": 1,

"type": "KEYWORD",

"word": "1*"

}],

"description": "AND(fields=(content), KEYWORD(1*, querypos=1, expanded))",

"fields": ["content"],

"type": "AND"

}],

"description": "AND(fields=(content), KEYWORD(1*, querypos=1))",

"type": "AND"

}},

"timed_out": False,

"took": 0

}

Go

Go

searchRequest := manticoresearch.NewSearchRequest("test")

query := map[string]interface{} {"query_string": "1*"}

source := map[string]interface{} { "excludes": []string {"*"} }

searchRequest.SetQuery(query)

searchRequest.SetSource(source)

searchReq.SetLimit(1)

searchReq.SetProfile(true)

res, _, _ := apiClient.SearchAPI.Search(context.Background()).SearchRequest(*searchRequest).Execute()

{

"hits":

{

"hits":

[{

"_id": "1",

"_score": 1480,

"_source": {}

}],

"total": 1

},

"profile":

{

"query":

{

"children":

[{

"children":

[{

"expanded": True,

"querypos": 1,

"type": "KEYWORD",

"word": "1*"

}],

"description": "AND(fields=(content), KEYWORD(1*, querypos=1, expanded))",

"fields": ["content"],

"type": "AND"

}],

"description": "AND(fields=(content), KEYWORD(1*, querypos=1))",

"type": "AND"

}},

"timed_out": False,

"took": 0

}

Profiling without running a query

The SQL statement EXPLAIN QUERY enables the display of the execution tree for a given full-text query without performing an actual search query on the table.

SQL

EXPLAIN QUERY index_base '@title running @body dog'\G

EXPLAIN QUERY index_base '@title running @body dog'\G

*************************** 1\. row ***************************

Variable: transformed_tree

Value: AND(

OR(

AND(fields=(title), KEYWORD(run, querypos=1, morphed)),

AND(fields=(title), KEYWORD(running, querypos=1, morphed))))

AND(fields=(body), KEYWORD(dog, querypos=2, morphed)))

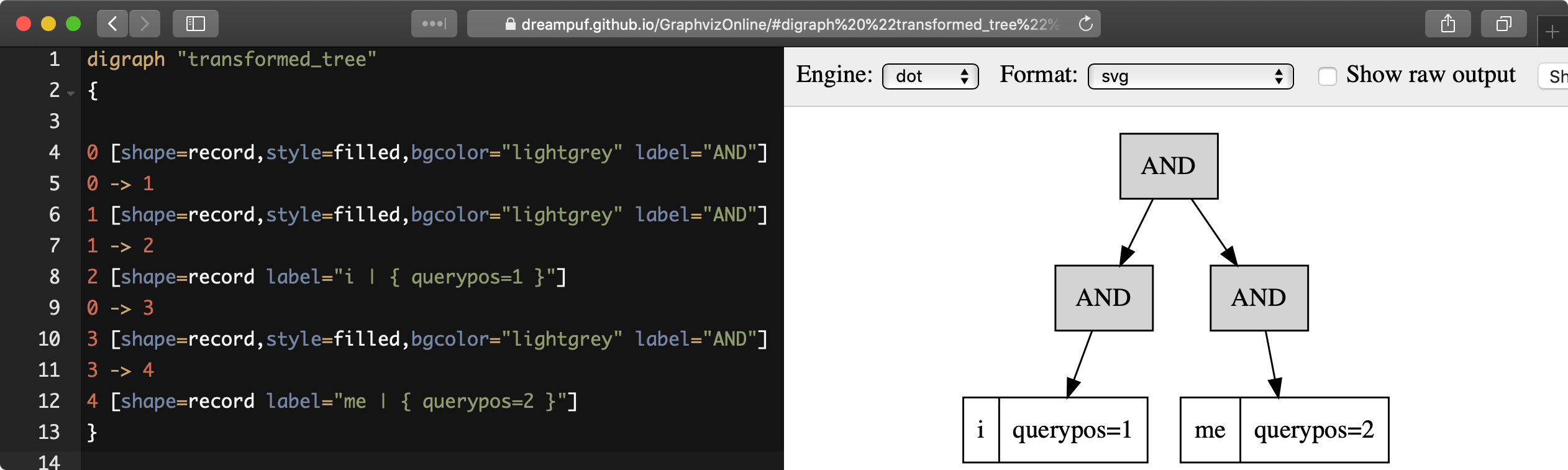

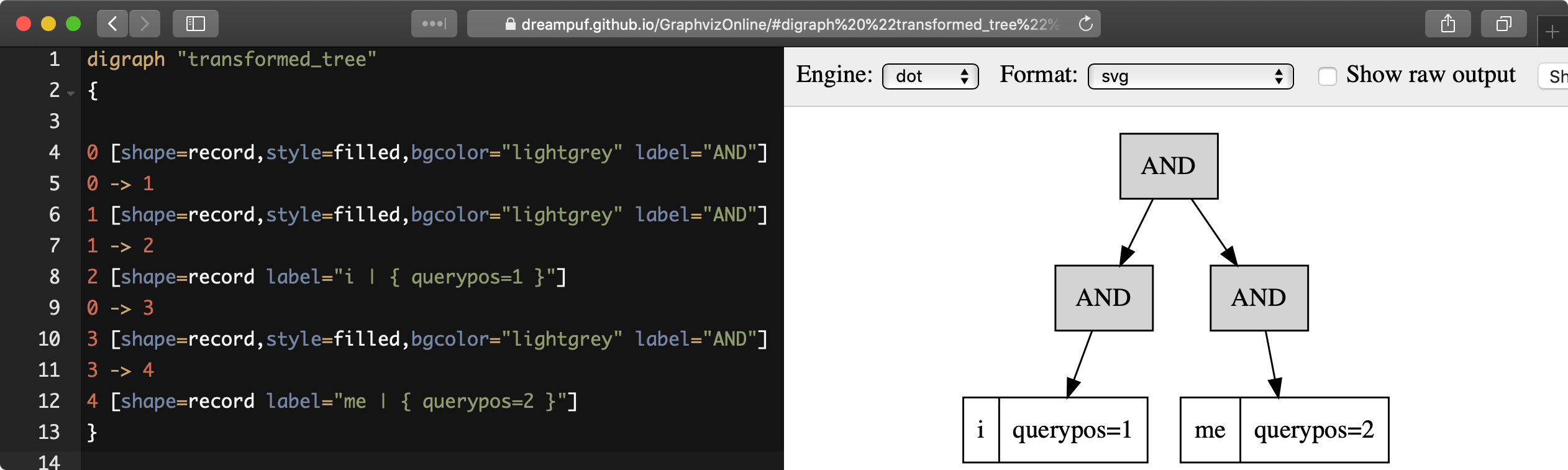

EXPLAIN QUERY ... option format=dot allows displaying the execution tree of a provided full-text query in a hierarchical format suitable for visualization by existing tools, such as https://dreampuf.github.io/GraphvizOnline:

SQL

EXPLAIN QUERY tbl 'i me' option format=dot\G

EXPLAIN QUERY tbl 'i me' option format=dot\G

*************************** 1. row ***************************

Variable: transformed_tree

Value: digraph "transformed_tree"

{

0 [shape=record,style=filled,bgcolor="lightgrey" label="AND"]

0 -> 1

1 [shape=record,style=filled,bgcolor="lightgrey" label="AND"]

1 -> 2

2 [shape=record label="i | { querypos=1 }"]

0 -> 3

3 [shape=record,style=filled,bgcolor="lightgrey" label="AND"]

3 -> 4

4 [shape=record label="me | { querypos=2 }"]

}

Viewing the match factors values

When using an expression ranker, it's possible to reveal the values of the calculated factors with the PACKEDFACTORS() function.

The function returns:

- The values of document-level factors (such as bm25, field_mask, doc_word_count)

- A list of each field that generated a hit (including lcs, hit_count, word_count, sum_idf, min_hit_pos, etc.)

- A list of each keyword from the query along with their tf and idf values

These values can be utilized to understand why certain documents receive lower or higher scores in a search or to refine the existing ranking expression.

Example:

SQL

SELECT id, PACKEDFACTORS() FROM test1 WHERE MATCH('test one') OPTION ranker=expr('1')\G

id: 1

packedfactors(): bm25=569, bm25a=0.617197, field_mask=2, doc_word_count=2,

field1=(lcs=1, hit_count=2, word_count=2, tf_idf=0.152356,

min_idf=-0.062982, max_idf=0.215338, sum_idf=0.152356, min_hit_pos=4,

min_best_span_pos=4, exact_hit=0, max_window_hits=1, min_gaps=2,

exact_order=1, lccs=1, wlccs=0.215338, atc=-0.003974),

word0=(tf=1, idf=-0.062982),

word1=(tf=1, idf=0.215338)

1 row in set (0.00 sec)

Queries can be automatically optimized if OPTION boolean_simplify=1 is specified. Some transformations performed by this optimization include:

- Excess brackets:

((A | B) | C) becomes (A | B | C); ((A B) C) becomes (A B C)

- Excess AND NOT:

((A !N1) !N2) becomes (A !(N1 | N2))

- Common NOT:

((A !N) | (B !N)) becomes ((A | B) !N)

- Common Compound NOT:

((A !(N AA)) | (B !(N BB))) becomes (((A | B) !N) | (A !AA) | (B !BB)) if the cost of evaluating N is greater than the sum of evaluating A and B

- Common subterm:

((A (N | AA)) | (B (N | BB))) becomes (((A | B) N) | (A AA) | (B BB)) if the cost of evaluating N is greater than the sum of evaluating A and B

- Common keywords:

(A | "A B"~N) becomes A; ("A B" | "A B C") becomes "A B"; ("A B"~N | "A B C"~N) becomes ("A B"~N)

- Common phrase:

("X A B" | "Y A B") becomes ("("X"|"Y") A B")

- Common AND NOT:

((A !X) | (A !Y) | (A !Z)) becomes (A !(X Y Z))

- Common OR NOT:

((A !(N | N1)) | (B !(N | N2))) becomes (( (A !N1) | (B !N2) ) !N)

Note that optimizing queries consumes CPU time, so for simple queries or hand-optimized queries, you'll achieve better results with the default boolean_simplify=0 value. Simplifications often benefit complex queries or algorithmically generated queries.

Queries like -dog, which could potentially include all documents from the collection are not allowed by default. To allow them, you must specify not_terms_only_allowed=1 either as a global setting or as a search option.

SQL

When you run a query via SQL over the MySQL protocol, you receive the requested columns as a result or an empty result set if nothing is found.

SQL

+------+------+--------+

| id | age | name |

+------+------+--------+

| 1 | 25 | joe |

| 2 | 25 | mary |

| 3 | 33 | albert |

+------+------+--------+

3 rows in set (0.00 sec)

Additionally, you can use the SHOW META call to see extra meta-information about the latest query.

SQL

SELECT id,story_author,comment_author FROM hn_small WHERE story_author='joe' LIMIT 3; SHOW META;

++--------+--------------+----------------+

| id | story_author | comment_author |

+--------+--------------+----------------+

| 152841 | joe | SwellJoe |

| 161323 | joe | samb |

| 163735 | joe | jsjenkins168 |

+--------+--------------+----------------+

3 rows in set (0.01 sec)

+----------------+-------+

| Variable_name | Value |

+----------------+-------+

| total | 3 |

| total_found | 20 |

| total_relation | gte |

| time | 0.010 |

+----------------+-------+

4 rows in set (0.00 sec)

In some cases, such as when performing a faceted search, you may receive multiple result sets as a response to your SQL query.

SQL

SELECT * FROM tbl WHERE MATCH('joe') FACET age;

+------+------+

| id | age |

+------+------+

| 1 | 25 |

+------+------+

1 row in set (0.00 sec)

+------+----------+

| age | count(*) |

+------+----------+

| 25 | 1 |

+------+----------+

1 row in set (0.00 sec)

In case of a warning, the result set will include a warning flag, and you can see the warning using SHOW WARNINGS.

SQL

SELECT * from tbl where match('"joe"/3'); show warnings;

+------+------+------+

| id | age | name |

+------+------+------+

| 1 | 25 | joe |

+------+------+------+

1 row in set, 1 warning (0.00 sec)

+---------+------+--------------------------------------------------------------------------------------------+

| Level | Code | Message |

+---------+------+--------------------------------------------------------------------------------------------+

| warning | 1000 | quorum threshold too high (words=1, thresh=3); replacing quorum operator with AND operator |

+---------+------+--------------------------------------------------------------------------------------------+

1 row in set (0.00 sec)

If your query fails, you will receive an error:

SQL

SELECT * from tbl where match('@surname joe');

ERROR 1064 (42000): index idx: query error: no field 'surname' found in schema

HTTP

Via the HTTP JSON interface, the query result is sent as a JSON document. Example:

{

"took":10,

"timed_out": false,

"hits":

{

"total": 2,

"hits":

[

{

"_id": "1",

"_score": 1,

"_source": { "gid": 11 }

},

{

"_id": "2",

"_score": 1,

"_source": { "gid": 12 }

}

]

}

}

took: time in milliseconds it took to execute the searchtimed_out: whether the query timed out or nothits: search results, with the following properties:total: total number of matching documentshits: an array containing matches

The query result can also include query profile information. See Query profile.

Each match in the hits array has the following properties:

_id: match id_score: match weight, calculated by the ranker_source: an array containing the attributes of this match

Source selection

By default, all attributes are returned in the _source array. You can use the _source property in the request payload to select the fields you want to include in the result set. Example:

{

"index":"test",

"_source":"attr*",

"query": { "match_all": {} }

}

You can specify the attributes you want to include in the query result as a string ("_source": "attr*") or as an array of strings ("_source": [ "attr1", "attri*" ]"). Each entry can be an attribute name or a wildcard (*, % and ? symbols are supported).

You can also explicitly specify which attributes you want to include and which to exclude from the result set using the includes and excludes properties:

"_source":

{

"includes": [ "attr1", "attri*" ],

"excludes": [ "*desc*" ]

}

An empty list of includes is interpreted as "include all attributes," while an empty list of excludes does not match anything. If an attribute matches both the includes and excludes, then the excludes win.

WHERE

WHERE is an SQL clause that works for both full-text matching and additional filtering. The following operators are available:

- Comparison operators

<, >, <=, >=, =, <>, BETWEEN, IN, IS NULL

- Boolean operators

AND, OR, NOT

MATCH('query') is supported and maps to a full-text query.

The {col_name | expr_alias} [NOT] IN @uservar condition syntax is supported. Refer to the SET syntax for a description of global user variables.

HTTP JSON

If you prefer the HTTP JSON interface, you can also apply filtering. It might seem more complex than SQL, but it is recommended for cases when you need to prepare a query programmatically, such as when a user fills out a form in your application.

Here's an example of several filters in a bool query.

This full-text query matches all documents containing product in any field. These documents must have a price greater than or equal to 500 (gte) and less than or equal to 1000 (lte). All of these documents must not have a revision less than 15 (lt).

JSON

POST /search

{

"index": "test1",

"query": {

"bool": {

"must": [

{ "match" : { "_all" : "product" } },

{ "range": { "price": { "gte": 500, "lte": 1000 } } }

],

"must_not": {

"range": { "revision": { "lt": 15 } }

}

}

}

}

bool query

The bool query matches documents based on boolean combinations of other queries and/or filters. Queries and filters must be specified in must, should, or must_not sections and can be nested.

JSON

POST /search

{

"index":"test1",

"query": {

"bool": {

"must": [

{ "match": {"_all":"keyword"} },

{ "range": { "revision": { "gte": 14 } } }

]

}

}

}

must

Queries and filters specified in the must section are required to match the documents. If multiple fulltext queries or filters are specified, all of them must match. This is the equivalent of AND queries in SQL. Note that if you want to match against an array (multi-value attribute), you can specify the attribute multiple times. The result will be positive only if all the queried values are found in the array, e.g.:

"must": [

{"equals" : { "product_codes": 5 }},

{"equals" : { "product_codes": 6 }}

]

Note also, it may be better in terms of performance to use:

{"in" : { "all(product_codes)": [5,6] }}

(see details below).

should

Queries and filters specified in the should section should match the documents. If some queries are specified in must or must_not, should queries are ignored. On the other hand, if there are no queries other than should, then at least one of these queries must match a document for it to match the bool query. This is the equivalent of OR queries. Note, if you want to match against an array (multi-value attribute) you can specify the attribute multiple times, e.g.:

"should": [

{"equals" : { "product_codes": 7 }},

{"equals" : { "product_codes": 8 }}

]

Note also, it may be better in terms of performance to use:

{"in" : { "any(product_codes)": [7,8] }}

(see details below).

must_not

Queries and filters specified in the must_not section must not match the documents. If several queries are specified under must_not, the document matches if none of them match.

JSON

POST /search

{

"index":"t",

"query": {

"bool": {

"should": [

{

"equals": {

"b": 1

}

},

{

"equals": {

"b": 3

}

}

],

"must": [

{

"equals": {

"a": 1

}

}

],

"must_not": {

"equals": {

"b": 2

}

}

}

}

}

Nested bool query

A bool query can be nested inside another bool so you can make more complex queries. To make a nested boolean query just use another bool instead of must, should or must_not. Here is how this query:

a = 2 and (a = 10 or b = 0)

should be presented in JSON.

JSON

a = 2 and (a = 10 or b = 0)

POST /search

{

"index":"t",

"query": {

"bool": {

"must": [

{

"equals": {

"a": 2

}

},

{

"bool": {

"should": [

{

"equals": {

"a": 10

}

},

{

"equals": {

"b": 0

}

}

]

}

}

]

}

}

}

More complex query:

(a = 1 and b = 1) or (a = 10 and b = 2) or (b = 0)

JSON

(a = 1 and b = 1) or (a = 10 and b = 2) or (b = 0)

POST /search

{

"index":"t",

"query": {

"bool": {

"should": [

{

"bool": {

"must": [

{

"equals": {

"a": 1

}

},

{

"equals": {

"b": 1

}

}

]

}

},

{

"bool": {

"must": [

{

"equals": {

"a": 10

}

},

{

"equals": {

"b": 2

}

}

]

}

},

{

"bool": {

"must": [

{

"equals": {

"b": 0

}

}

]

}

}

]

}

}

}

Queries in SQL format (query_string) can also be used in bool queries.

JSON

POST /search

{

"index": "test1",

"query": {

"bool": {

"must": [

{ "query_string" : "product" },

{ "query_string" : "good" }

]

}

}

}

Various filters

Equality filters

Equality filters are the simplest filters that work with integer, float and string attributes.

JSON

POST /search

{

"index":"test1",

"query": {

"equals": { "price": 500 }

}

}

Filter equals can be applied to a multi-value attribute and you can use:

any() which will be positive if the attribute has at least one value which equals to the queried value;all() which will be positive if the attribute has a single value and it equals to the queried value

JSON

POST /search

{

"index":"test1",

"query": {

"equals": { "any(price)": 100 }

}

}

Set filters

Set filters check if attribute value is equal to any of the values in the specified set.

Set filters support integer, string and multi-value attributes.

JSON

POST /search

{

"index":"test1",

"query": {

"in": {

"price": [1,10,100]

}

}

}

When applied to a multi-value attribute you can use:

any() (equivalent to no function) which will be positive if there's at least one match between the attribute values and the queried values;all() which will be positive if all the attribute values are in the queried set

JSON

POST /search

{

"index":"test1",

"query": {

"in": {

"all(price)": [1,10]

}

}

}

Range filters

Range filters match documents that have attribute values within a specified range.

Range filters support the following properties:

gte: greater than or equal togt: greater thanlte: less than or equal tolt: less than

JSON

POST /search

{

"index":"test1",

"query": {

"range": {

"price": {

"gte": 500,

"lte": 1000

}

}

}

}

Geo distance filters

geo_distance filters are used to filter the documents that are within a specific distance from a geo location.

Specifies the pin location, in degrees. Distances are calculated from this point.

Specifies the attributes that contain latitude and longitude.

Specifies distance calculation function. Can be either adaptive or haversine. adaptive is faster and more precise, for more details see GEODIST(). Optional, defaults to adaptive.

Specifies the maximum distance from the pin locations. All documents within this distance match. The distance can be specified in various units. If no unit is specified, the distance is assumed to be in meters. Here is a list of supported distance units:

- Meter:

m or meters

- Kilometer:

km or kilometers

- Centimeter:

cm or centimeters

- Millimeter:

mm or millimeters

- Mile:

mi or miles

- Yard:

yd or yards

- Feet:

ft or feet

- Inch:

in or inch

- Nautical mile:

NM, nmi or nauticalmiles

location_anchor and location_source properties accept the following latitude/longitude formats:

- an object with lat and lon keys:

{ "lat": "attr_lat", "lon": "attr_lon" }

- a string of the following structure:

"attr_lat, attr_lon"

- an array with the latitude and longitude in the following order:

[attr_lon, attr_lat]

Latitude and longitude are specified in degrees.

Basic example

POST /search

{

"index":"test",

"query": {

"geo_distance": {

"location_anchor": {"lat":49, "lon":15},

"location_source": {"attr_lat, attr_lon"},

"distance_type": "adaptive",

"distance":"100 km"

}

}

}

Advanced example

`geo_distance` can be used as a filter in bool queries along with matches or other attribute filters.

POST /search

{

"index": "geodemo",

"query": {

"bool": {

"must": [

{

"match": {

"*": "station"

}

},

{

"equals": {

"state_code": "ENG"

}

},

{

"geo_distance": {

"distance_type": "adaptive",

"location_anchor": {

"lat": 52.396,

"lon": -1.774

},

"location_source": "latitude_deg,longitude_deg",

"distance": "10000 m"

}

}

]

}

}

}

Manticore enables the use of arbitrary arithmetic expressions through both SQL and HTTP, incorporating attribute values, internal attributes (document ID and relevance weight), arithmetic operations, several built-in functions, and user-defined functions. Below is the complete reference list for quick access.

Arithmetic operators

Standard arithmetic operators are available. Arithmetic calculations involving these operators can be executed in three different modes:

- using single-precision, 32-bit IEEE 754 floating point values (default),

- using signed 32-bit integers,

- using 64-bit signed integers.

The expression parser automatically switches to integer mode if no operations result in a floating point value. Otherwise, it uses the default floating point mode. For example, a+b will be computed using 32-bit integers if both arguments are 32-bit integers; or using 64-bit integers if both arguments are integers but one of them is 64-bit; or in floats otherwise. However, a/b or sqrt(a) will always be computed in floats, as these operations return a non-integer result. To avoid this, you can use IDIV(a,b) or a DIV b form. Additionally, a*b will not automatically promote to 64-bit when arguments are 32-bit. To enforce 64-bit results, use BIGINT(), but note that if non-integer operations are present, BIGINT() will simply be ignored.

Comparison operators

The comparison operators return 1.0 when the condition is true and 0.0 otherwise. For example, (a=b)+3 evaluates to 4 when attribute a is equal to attribute b, and to 3 when a is not. Unlike MySQL, the equality comparisons (i.e., = and <> operators) include a small equality threshold (1e-6 by default). If the difference between the compared values is within the threshold, they are considered equal.

The BETWEEN and IN operators, in the case of multi-value attributes, return true if at least one value matches the condition (similar to ANY()). The IN operator does not support JSON attributes. The IS (NOT) NULL operator is supported only for JSON attributes.

Boolean operators

Boolean operators (AND, OR, NOT) behave as expected. They are left-associative and have the lowest priority compared to other operators. NOT has higher priority than AND and OR but still less than any other operator. AND and OR share the same priority, so using parentheses is recommended to avoid confusion in complex expressions.

Bitwise operators

These operators perform bitwise AND and OR respectively. The operands must be of integer types.

Functions:

Expressions in HTTP JSON

In the HTTP JSON interface, expressions are supported via script_fields and expressions.

script_fields

{

"index": "test",

"query": {

"match_all": {}

}, "script_fields": {

"add_all": {

"script": {

"inline": "( gid * 10 ) | crc32(title)"

}

},

"title_len": {

"script": {

"inline": "crc32(title)"

}

}

}

}

In this example, two expressions are created: add_all and title_len. The first expression calculates ( gid * 10 ) | crc32(title) and stores the result in the add_all attribute. The second expression calculates crc32(title) and stores the result in the title_len attribute.

Currently, only inline expressions are supported. The value of the inline property (the expression to compute) has the same syntax as SQL expressions.

The expression name can be utilized in filtering or sorting.

script_fields

{

"index":"movies_rt",

"script_fields":{

"cond1":{

"script":{

"inline":"actor_2_facebook_likes =296 OR movie_facebook_likes =37000"

}

},

"cond2":{

"script":{

"inline":"IF (IN (content_rating,'TV-PG','PG'),2, IF(IN(content_rating,'TV-14','PG-13'),1,0))"

}

}

},

"limit":10,

"sort":[

{

"cond2":"desc"

},

{

"actor_1_name":"asc"

},

{

"actor_2_name":"desc"

}

],

"profile":true,

"query":{

"bool":{

"must":[

{

"match":{

"*":"star"

}

},

{

"equals":{

"cond1":1

}

}

],

"must_not":[

{

"equals":{

"content_rating":"R"

}

}

]

}

}

}

By default, expression values are included in the _source array of the result set. If the source is selective (see Source selection), the expression name can be added to the _source parameter in the request. Note, the names of the expressions must be in lowercase.

expressions

expressions is an alternative to script_fields with a simpler syntax. The example request adds two expressions and stores the results into add_all and title_len attributes. Note, the names of the expressions must be in lowercase.

expressions

{

"index": "test",

"query": { "match_all": {} },

"expressions":

{

"add_all": "( gid * 10 ) | crc32(title)",

"title_len": "crc32(title)"

}

}

The SQL SELECT clause and the HTTP /search endpoint support a number of options that can be used to fine-tune search behavior.

OPTION

General syntax

SQL:

SELECT ... [OPTION <optionname>=<value> [ , ... ]] [/*+ [NO_][ColumnarScan|DocidIndex|SecondaryIndex(<attribute>[,...])]] /*]

HTTP:

POST /search

{

"index" : "index_name",

"options":

{

"optionname": "value",

"optionname2": <value2>

}

}

SQL:

SQL

SELECT * FROM test WHERE MATCH('@title hello @body world')

OPTION ranker=bm25, max_matches=3000,

field_weights=(title=10, body=3), agent_query_timeout=10000

+------+-------+-------+

| id | title | body |

+------+-------+-------+

| 1 | hello | world |

+------+-------+-------+

1 row in set (0.00 sec)

JSON:

JSON

POST /search

{

"index" : "test",

"query": {

"match": {

"title": "hello"

},

"match": {

"body": "world"

}

},

"options":

{

"ranker": "bm25",

"max_matches": 3000,

"field_weights": {

"title": 10,

"body": 3

},

"agent_query_timeout": 10000

}

}

{

"took": 0,

"timed_out": false,

"hits": {

"total": 1,

"total_relation": "eq",

"hits": [

{

"_id": "1",

"_score": 10500,

"_source": {

"title": "hello",

"body": "world"

}

}

]

}

}

Supported options are:

accurate_aggregation

Integer. Enables or disables guaranteed aggregate accuracy when running groupby queries in multiple threads. Default is 0.